The best piece of writing I have come across in the last week or so is a chapter from the Bromley and Paavola book on environmental economics that I have been reading. By A. Allan Schmid, it is called “All Environmental Policy Instruments Require a Moral Choice as to Whose Interests Count.” The argument is that it is impossible to solve environmental problems in a purely technical way (by internalizing externalities, to borrow from the economics lingo). When a policy is presented that way, there is always a moral choice being concealed. In tort law, this choice becomes explicit through an instrument called nuisance.

If my neighbours are making homemade beer and the process produces a constant cloud of nasty-smelling gas that wafts into my yard and through my windows, I could seek a remedy in court. It would then be decided whether or not the smell constitutes a nuisance. If not, the court effectively grants my neighbours a right to produce the smell. I would then be free to try to convince them to use that right differently, for instance by paying them not to make beer.

If the court rules in my favour, one of two things can happen. It can grant an injunction, forbidding my neighbours to make beer without my permission. This is great for me, since I can effectively sell them the right to make beer if the amount they are willing to pay exceeds the cost the smell imposes on me. This is what Coase is alluding to in his argument that it doesn’t matter who you assign rights to, as long as bargaining can occur (see: Coase Theorem). Of course, he ignores the distributional consequences of assigning the rights one way or another. As an alternative to an injunction, the court can fix a set amount of damages to be paid. This relieves the nuisance, but gives me less scope to take advantage of the court’s decision.

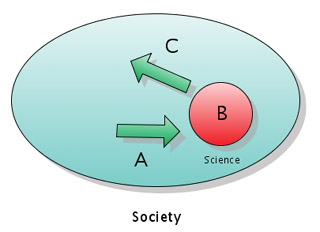

What the example illustrates is that in creating policies to deal with externalities, the rights in question must be effectively assigned to one party or another. We either assign companies the right to pollute, which the people around them can then pay them not to exercise, or we assign those people the right not to live in a polluted place, in which case the company has to go to them with an offer. The assigning of rights, then, isn’t a mere technical instrument for achieving an environmental end, but a matter of distributive justice.
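To make the beer example concrete, here is a rough numerical sketch of the bargaining logic. Everything in it is my own assumption rather than anything from Schmid or Coase: the function name `bargain`, the dollar figures, zero transaction costs, and the idea that the two sides split the gains from any deal evenly. It is only meant to show that the efficient outcome can be the same under either assignment of rights while the payoffs differ.

```python
def bargain(brewer_value, neighbour_harm, rights_holder, split=0.5):
    """Toy Coasean bargain between a brewer and a neighbour.

    brewer_value   -- hypothetical value the brewer gets from making beer
    neighbour_harm -- hypothetical cost the smell imposes on the neighbour
    rights_holder  -- "brewer" (right to smell) or "neighbour" (injunction)
    split          -- assumed share of the gains from trade kept by the
                      rights holder (0.5 means an even split)

    Returns (beer_is_made, brewer_payoff, neighbour_payoff).
    Assumes costless bargaining, which real disputes rarely offer.
    """
    brewing_is_efficient = brewer_value > neighbour_harm

    if rights_holder == "brewer":
        if brewing_is_efficient:
            # The neighbour cannot afford to buy the brewer out.
            return True, brewer_value, -neighbour_harm
        # The neighbour pays the brewer to stop.
        price = brewer_value + split * (neighbour_harm - brewer_value)
        return False, price, -price

    if rights_holder == "neighbour":
        if not brewing_is_efficient:
            # The brewer cannot afford to buy permission.
            return False, 0.0, 0.0
        # The brewer pays the neighbour for permission to brew.
        price = neighbour_harm + split * (brewer_value - neighbour_harm)
        return True, brewer_value - price, price - neighbour_harm

    raise ValueError("rights_holder must be 'brewer' or 'neighbour'")


if __name__ == "__main__":
    for holder in ("brewer", "neighbour"):
        made, brewer, neighbour = bargain(100, 60, holder)
        print(holder, made, brewer, neighbour, "total:", brewer + neighbour)
```

With these made-up numbers the beer gets brewed in both cases and the combined payoff is identical, but who ends up better off depends entirely on who was handed the right in the first place.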

Consider the case of fisheries access agreements in West Africa. West African governments have the sovereign right to exploit the waters in their Exclusive Economic Zones (EEZs). They can also choose to sell that right, as many have done, to the EU. The governments end up getting about 10% of the value of the fish that are caught, while suffering the loss of future revenue that is associated with the depletion of the fisheries (since they are exploited at an unsustainable level). In this case, the distributional consequences of West African governments being rights holders are fairly adverse. The incentives generated harm the life prospects of those whose protein intake previously came from fish caught by artisanal fisheries, which are now less productive because of EU industrial fishing. Likewise, the life prospects of future generations of citizens are harmed.

One of the best bits of the Schmid piece is the following:

A popular phrase contrasts “command and control” with voluntary choice. Another contrasts “coercive” regulations with “free” markets. This is mischievous, if not devious. At least, it is certainly selective perception. First of all, the market is not a single unique thing. There are as many markets as there are starting place ownership structures. I personally love markets, but of course I always want to be a seller of opportunities and not a buyer. Equally mischievous is the idea that externalities are a special case where markets fail. Indeed, externalities are the ubiquitous stuff of scarcity and interdependence.

He puts paid to the idea that there is a tradeoff between economic efficiency and moral principles. That is simple enough when you realize there is an infinite set of economically efficient outcomes, given different possible preferences and starting distributions.

Those wanting to read the entire piece should see: Schmid, A. Allan. “All Environmental Policy Instruments Require a Moral Choice as to Whose Interests Count.” In Bromley, Daniel and Jouni Paavola (eds). Economics, Ethics, and Environmental Policy: Contested Choices. Oxford: Blackwell Publishing. 2002. pp. 133-147.