While playing around with the GPS data from my exercise walks, I wanted to make a heat map showing the likelihood of my being in any particular place.

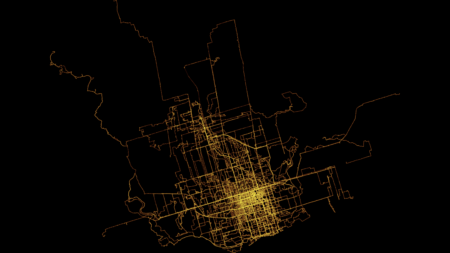

Here’s one I made using Seth Golub’s heatmap.py Python script (radius 5, decay 0.75):

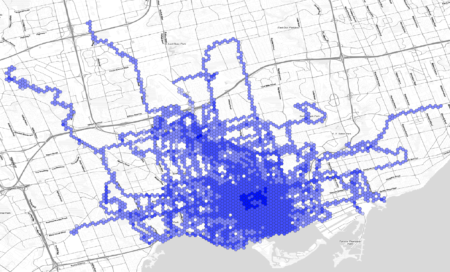

I also made one in QGIS. First I converted the tracks to a set of points in a CSV file. Then I created a spatial index of the point layer, created a hexagonal grid of polygons of a sensible size, and counted the number of points in each. Then I rendered that as brighter or darker hexagons:

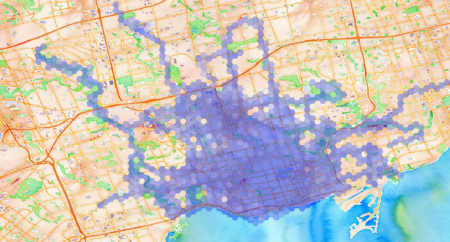
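For anyone who would rather script the hexagon counting than click through QGIS, here is a minimal sketch of the same idea in plain Python. It is not what QGIS does internally: instead of building a grid layer and a spatial index, it computes which hexagon each point falls in directly from its coordinates. The function names (`hex_cell`, `hex_counts`, `hex_centre`) and the `size` parameter are my own inventions for illustration, and it assumes the points are already in a projected coordinate system measured in metres rather than raw latitude and longitude.

```python
import math
from collections import Counter


def hex_cell(x, y, size):
    """Return the axial (q, r) index of the pointy-top hexagon containing (x, y).

    `size` is the distance from a hexagon's centre to any of its corners,
    in the same units as x and y (assumed here to be metres).
    """
    # Convert to fractional axial coordinates.
    q = (math.sqrt(3) / 3 * x - y / 3) / size
    r = (2 / 3 * y) / size

    # Round to the nearest hexagon using cube coordinates (cx + cy + cz == 0).
    cx, cz = q, r
    cy = -cx - cz
    rx, ry, rz = round(cx), round(cy), round(cz)
    dx, dy, dz = abs(rx - cx), abs(ry - cy), abs(rz - cz)

    # Recompute the component with the largest rounding error
    # so the three components still sum to zero.
    if dx > dy and dx > dz:
        rx = -ry - rz
    elif dy > dz:
        ry = -rx - rz
    else:
        rz = -rx - ry
    return rx, rz


def hex_counts(points, size):
    """Count how many (x, y) points fall in each hexagon."""
    return Counter(hex_cell(x, y, size) for x, y in points)


def hex_centre(q, r, size):
    """Return the (x, y) centre of hexagon (q, r), e.g. for drawing it."""
    return size * math.sqrt(3) * (q + r / 2), size * 1.5 * r


# Hypothetical sample points, in metres from an arbitrary origin.
sample = [(0.0, 0.0), (10.0, 10.0), (173.2, 0.0)]
counts = hex_counts(sample, size=100)
```

The resulting counts can then be mapped to brightness however you like; dividing each count by the total number of points gives a rough "fraction of time spent here" if the GPS points were logged at regular intervals.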

Here are the hexagons semi-transparent and rendered on a faux watercolour of the Toronto area:

The walks have sometimes been lonely and sometimes been scary, but they have been the main thing getting me out of the house and providing exercise during the pandemic. They do make me feel like I have a broader understanding of the city, though walking through a neighbourhood at night with headphones on only gives you a certain kind of perception.